24th of November 2025

The State of AI in B2B Marketing Report by The Growth Syndicate

Key highlights:

- Most teams believe AI will help their work, yet current use lags behind potential, with benefits rated 8.8 out of 10 on average and execution at 6.4 out of 10, and only 26 percent rating execution at 8 or higher.

- Self‑reported knowledge sits between belief and execution, averaging 7.4 out of 10, with about half rating 8 or higher, which signals a steep drop from conviction to practical capability.

- Trust in AI outputs is moderate at 5.8 out of 10, so a verify‑before‑publishing workflow is the norm rather than the exception.

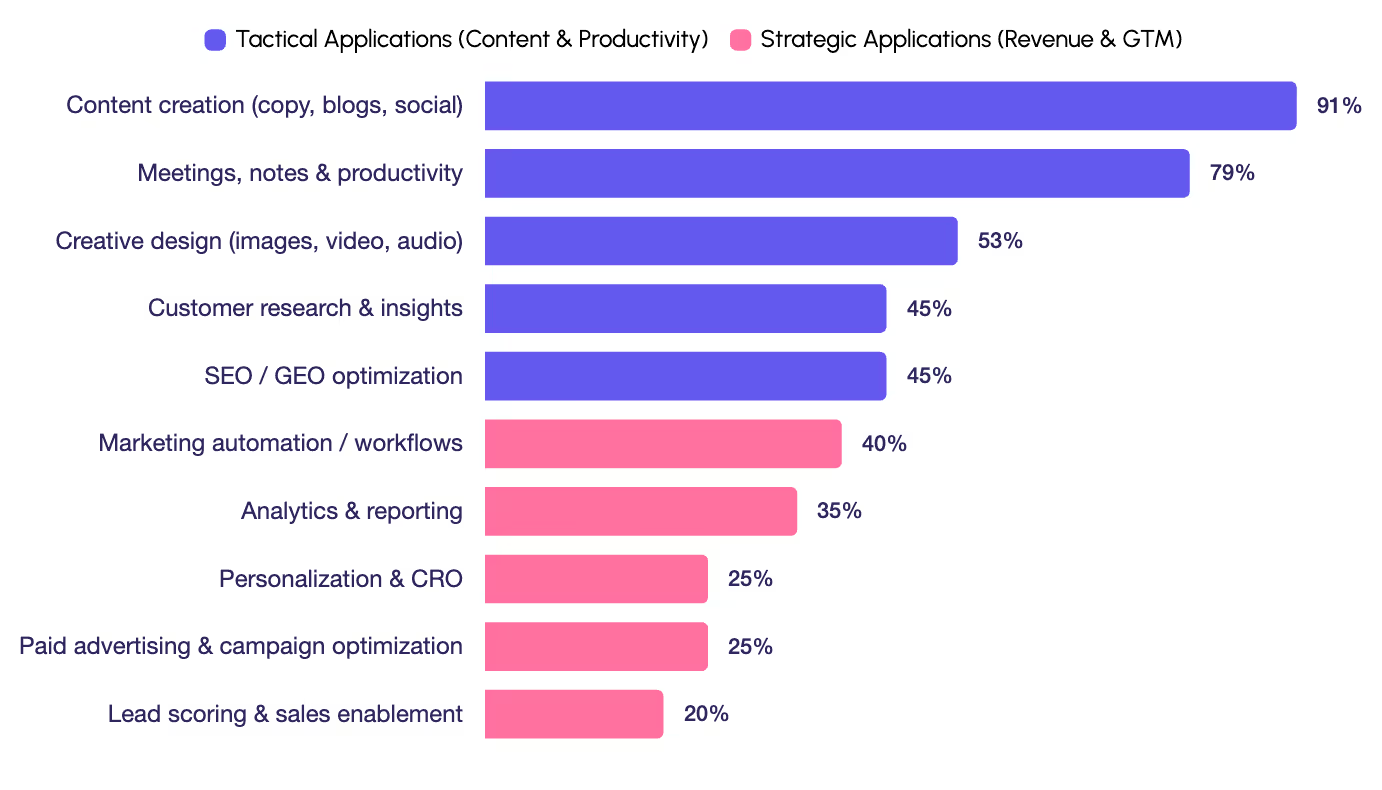

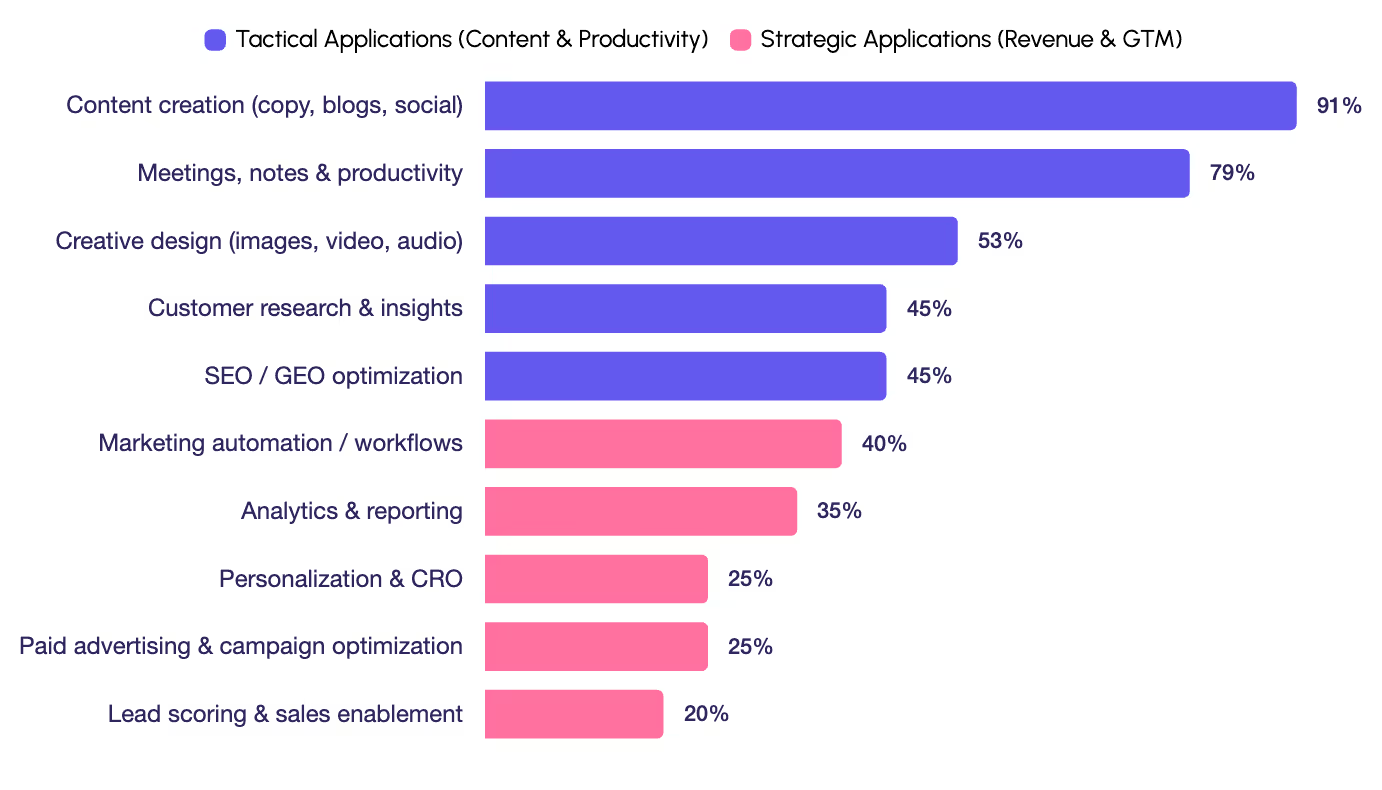

- Adoption concentrates on tactical tasks, with 91 percent using AI for content creation and 79 percent for productivity, while more strategic uses such as lead scoring at 31 percent and personalization near 25 percent trail significantly.

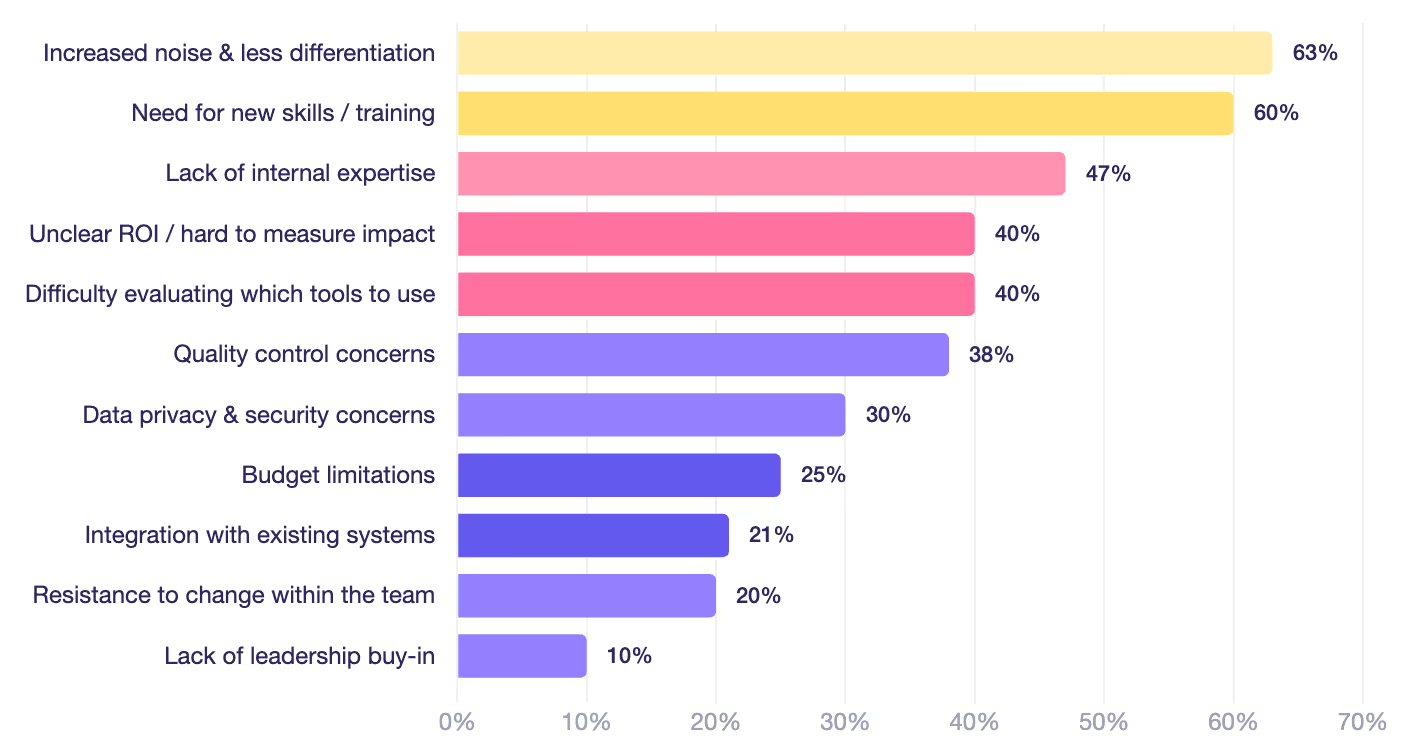

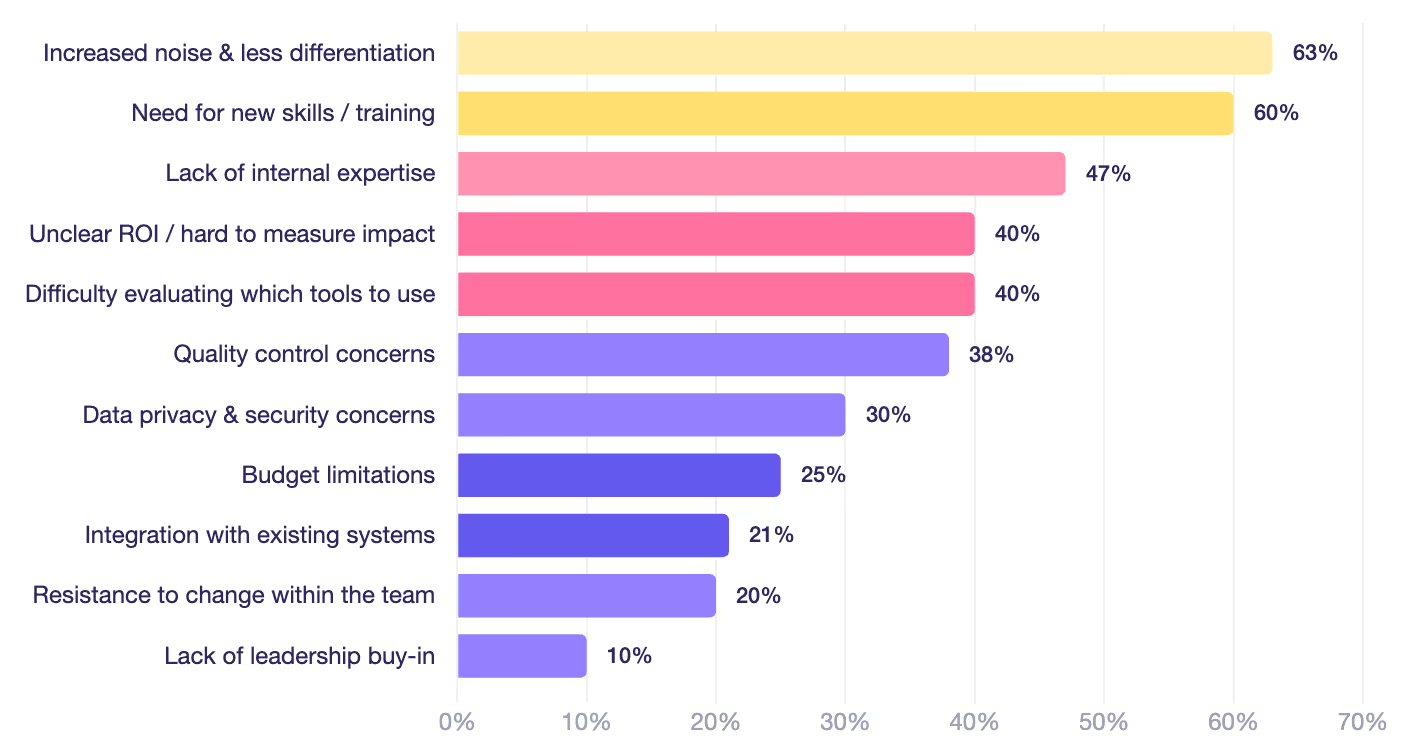

- Skill gaps are the primary brake on progress, with 60 percent citing the need for training and 47 percent citing a lack of internal expertise, while only 25 percent point to budget constraints.

- Many worry about sameness, with 63 percent concerned that AI increases noise and reduces differentiation as output volume rises.

- Governance is thin at this stage, with around 50 percent lacking formal AI policies and roughly 20 percent reporting no clear owner for AI adoption, which weakens consistency and measurement.

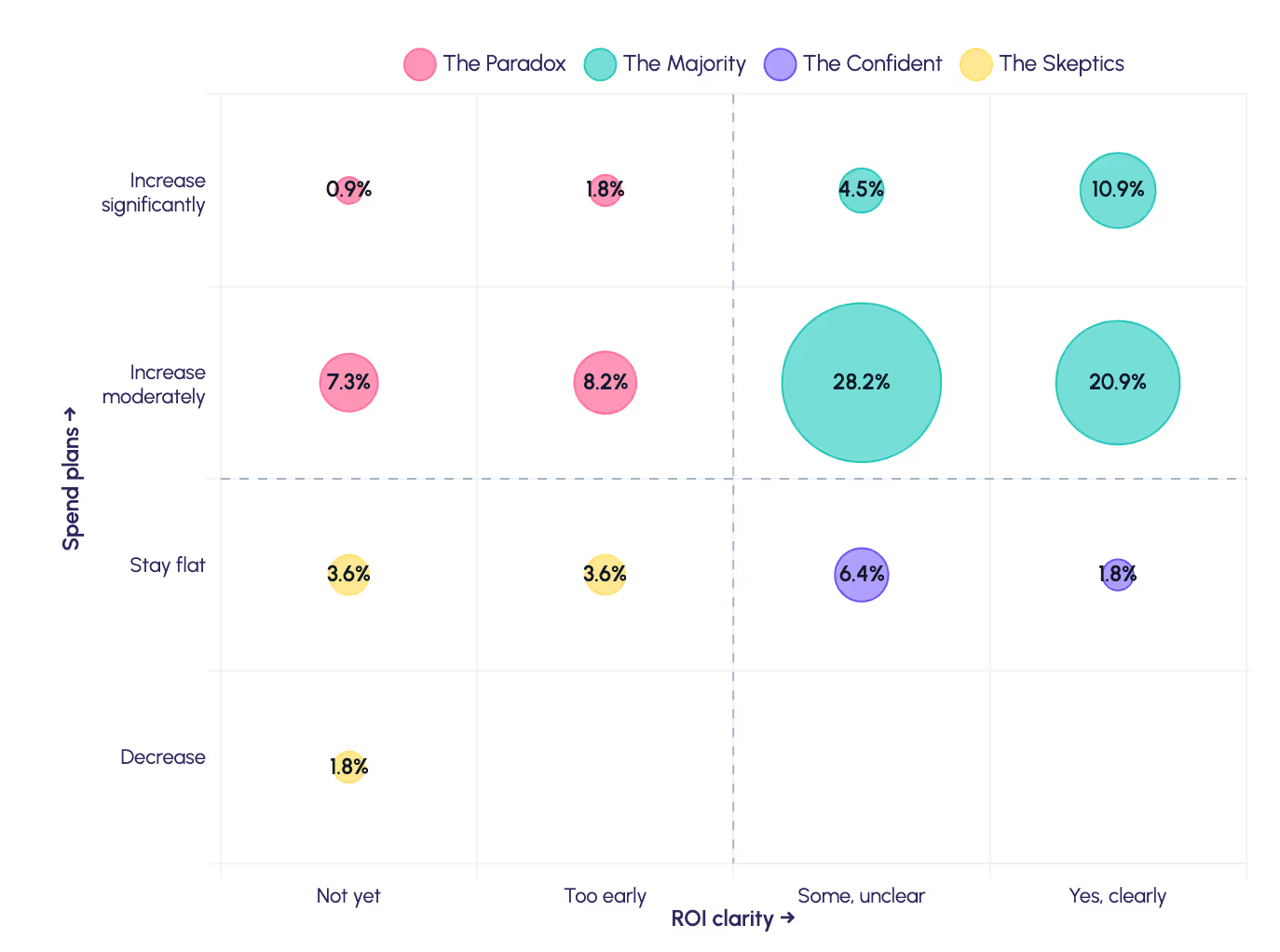

- Spending is rising without clear ROI visibility, as approximately 83 percent expect higher AI spend in the next 12 months while about 60 percent have no dedicated AI budget line.

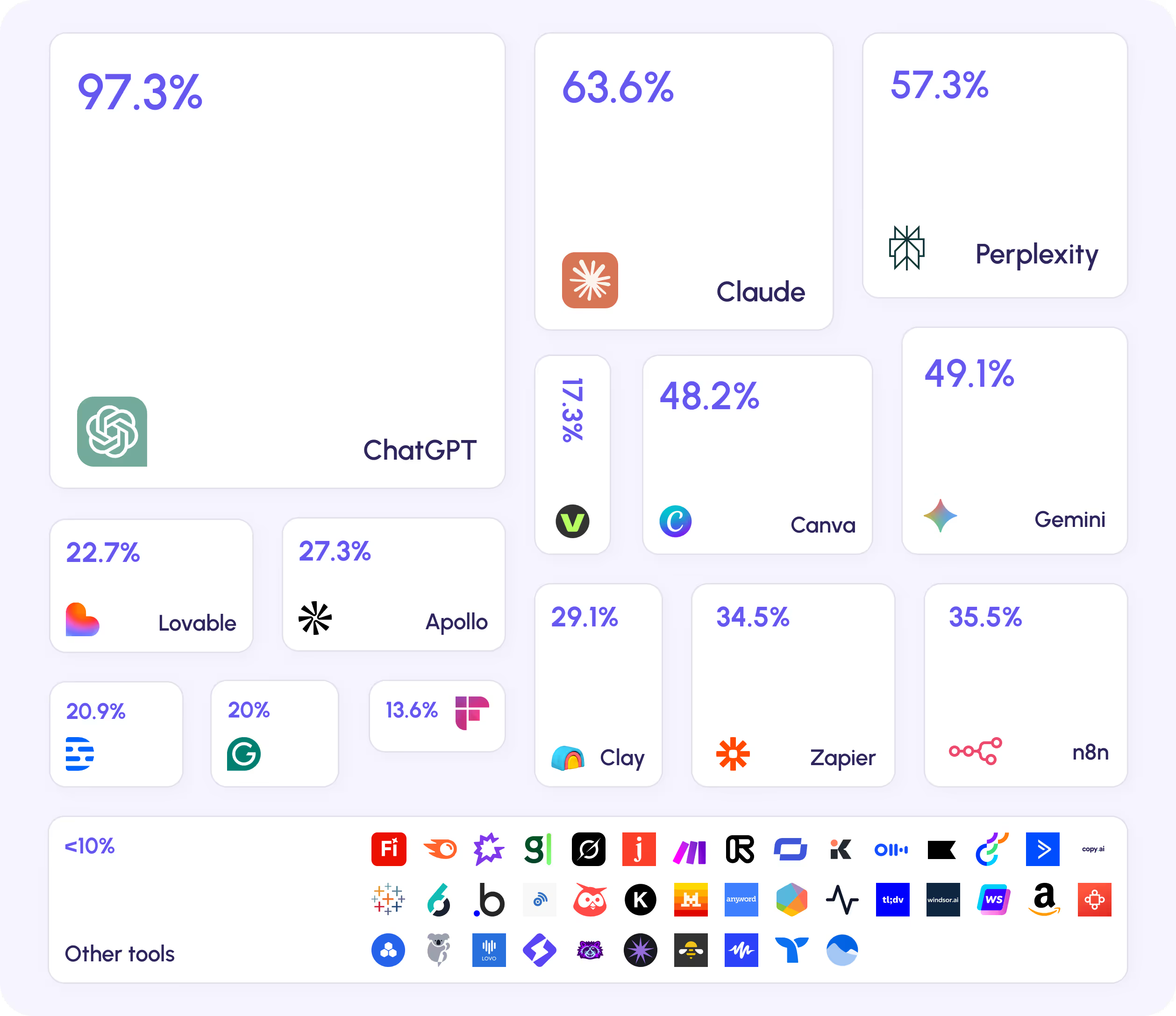

- Tool usage clusters around general‑purpose systems, with reported adoption of ChatGPT at 97 percent, Claude at 64 percent, Perplexity at 57 percent, and Gemini at 49 percent, while niche applications see far less use.

Introduction

Why we wrote this

What this report covers

- The confidence-competence gap. Why marketers believe AI is critical but can't extract value

- The differentiation paradox. Why most think AI creates sameness, not competitive advantage

- Usage patterns. What teams are actually doing versus what they say they're doing

- Barriers. Why adoption is high but implementation is shallow

- The measurement crisis. Why 70% can't prove ROI but keep increasing spend

- A graphic map of the AI tools most commonly used by our 110 respondents, highlighting where adoption concentrates around core platforms and how niche apps show up.

- What separates teams that scale from teams that stay stuck

- Real implementation lessons from founders, fractional CMOs, and growth leaders

- Why some practitioners achieve high AI capability while most remain stuck experimenting

- Patterns across successful integrations (not vendor case studies with perfect results)

- The specific decisions we've made about where AI creates value and where it doesn't

- Boundaries we've set around what AI shouldn't touch

- Three organizational paths based on where you're starting from

- Two critical capabilities every marketer needs to develop

- The choice between creative strategic leadership and technical AI orchestration

- How to avoid getting stuck in the dangerous middle

What we're actually seeing

1. Our survey: How B2B marketing teams view and use AI

This represents the context where most marketing teams operate. Small groups, constrained budgets, limited infrastructure, real tradeoffs about where to invest time and attention.

Here are some key patterns we identified:

- There's considerable distance between confidence in AI's potential, knowledge of how to use it effectively, and actual execution capability. The drop-off at each stage is significant.

- When you ask practitioners directly whether AI makes differentiation easier or harder, more say harder. That's worth understanding given how much of the adoption narrative assumes AI creates competitive advantage.

- Usage concentrates heavily on content creation and productivity tools while strategic applications like lead scoring and personalization lag substantially.

- The main barrier to deeper adoption isn't budget constraints; it's skills and expertise.

- Most teams report increasing AI spending over the next twelve months despite being unable to clearly measure returns from current investments.

These patterns might represent natural stages in how organizations build new capabilities over time. However, they do suggest that the path from experimentation to systematic value creation is longer and more complex than early adoption narratives typically acknowledge.

The belief-reality gap

To what extent do you agree that AI developments will be beneficial to your work?

How up to speed do you feel with developments in AI?

How well do you feel your team leverages AI in its work today?

The belief-execution gaps between roles

In short: belief is a constant, while execution changes based on operating model. Fractional leaders execute highest on average, while VP-level leaders show the most distance between conviction and what teams can do today.

The differentiation paradox

What impact do you expect AI to have on differentiation in B2B marketing?

Trust in AI outputs: the "verify everything" mindset

Content dominance, strategic lag

Where are teams actually using AI?

Skills over budget

Skills, not budget: what actually blocks AI adoption

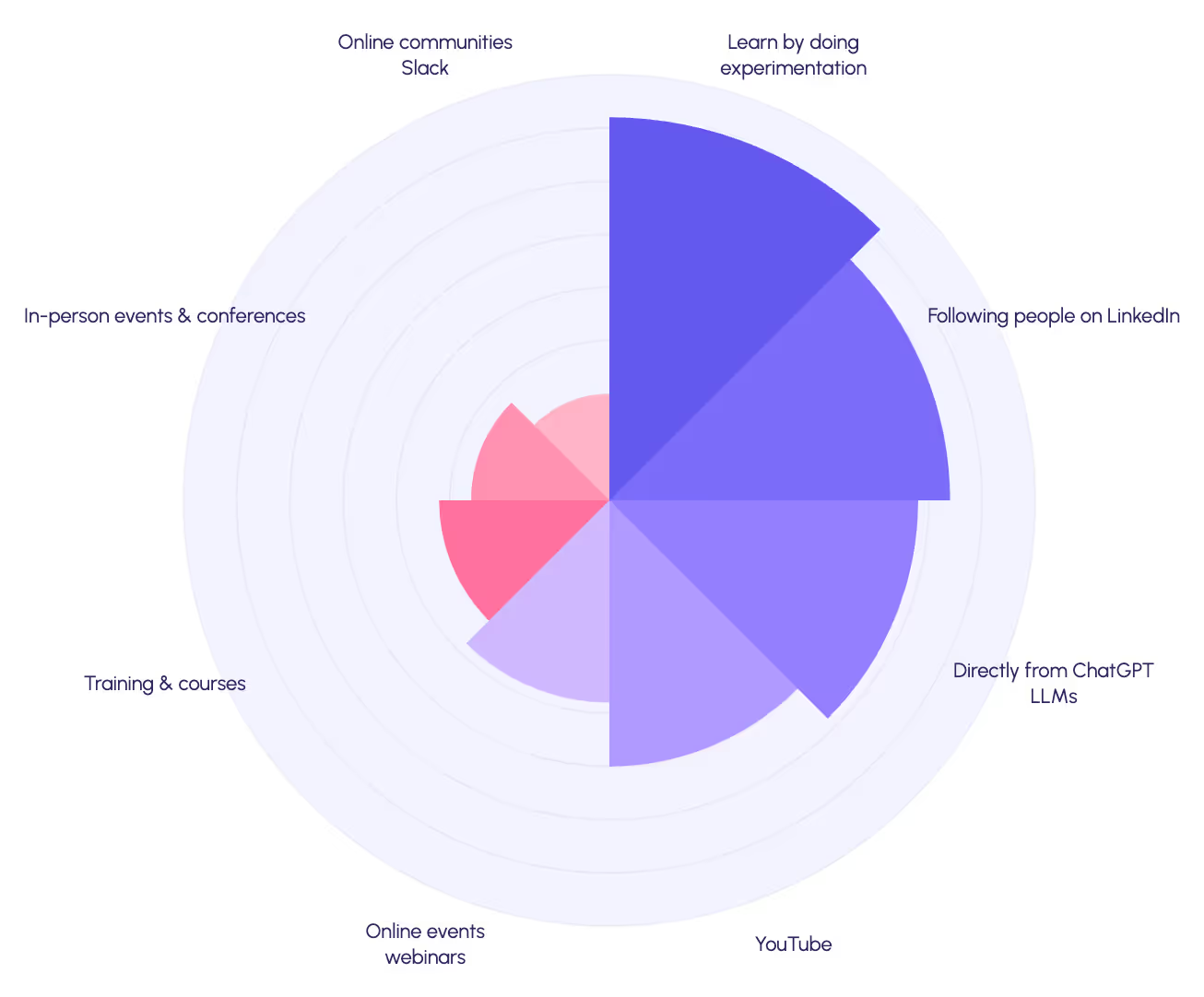

How marketing teams are learning AI

- ~60% have no dedicated AI budget

- ~50% have no formal AI usage policies

- ~20% have no one formally owning AI adoption in their organization

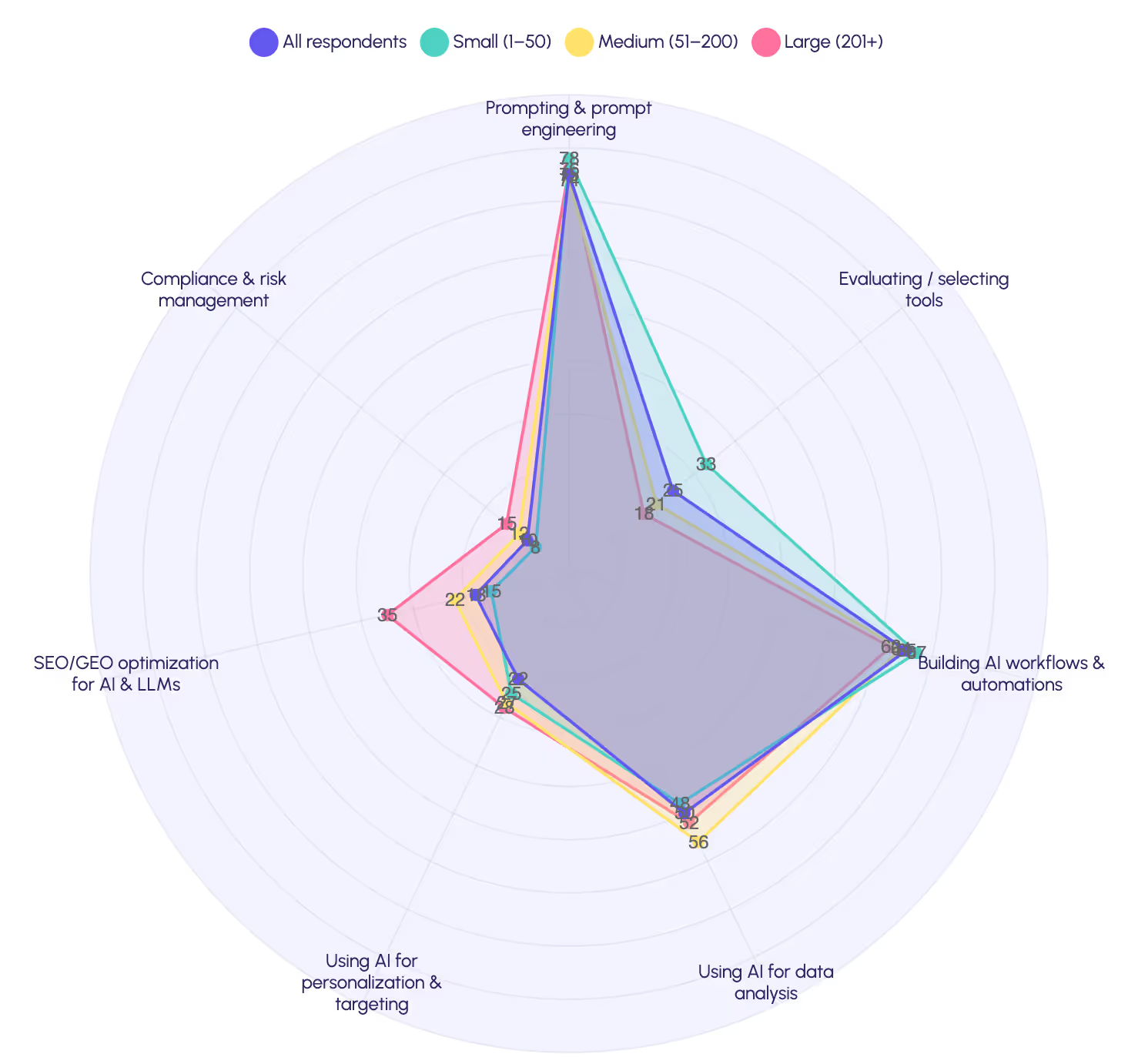

AI skills being developed by company size

Budgets, ROI, and who actually owns this

About 83% plan to increase AI spend (moderately or significantly), while roughly two-thirds say ROI is not yet clearly measured.

Do you have formal AI usage guidelines or policies in place?

2. AI tool map

3. Expert perspectives

- Maja Voje (Go-To-Market Strategist and Author)

- Jean Bonnenfant (Head of Growth at Lleverage)

- Bernardo Nunes (Data & AI Transformation Specialist)

- Anuj Adhiya (Expert-in-Residence at Techstars and Author of "Growth Hacking for Dummies")

- Lisa Brouwer (Principal at Curiosity VC)

- Wes Bush (CEO & Founder of ProductLed)

- David Arnoux (Fractional CxO & Co-Founder at Humanoidz)

- Baptiste "Baba" Hausmann (Founder at Baba SEO)

- Ricardo Ghekiere (Co-Founder at BetterPic and BetterStudi)

- Iliya Valchanov (CEO at Juma, formerly Team-GPT)

- The capability chasm: Teams aren't progressing along a smooth adoption curve. Instead, they're clustering into distinct maturity stages with fundamentally different operating models. The distance between traditional, augmented, and automated teams appears to be widening rather than closing.

- Execution to orchestration: The role of the marketer is changing from doing the actual work to designing systems that execute it. This changes what skills matter, how teams are structured, and what separates high performers from those at risk of obsolescence.

- Infrastructure as prerequisite: Speed and automation only create advantage when built on deliberate choices about data quality, integration architecture, and human oversight. Without this foundation, AI adoption adds complexity without compounding value.

The capability chasm

- Traditional teams have humans executing workflows end to end. Campaigns get assembled manually. SDRs research accounts, write outreach, and update CRMs by hand. This is still where most teams operate.

- Augmented teams have humans working alongside copilots. AI drafts content, summarizes calls, proposes next actions, and updates systems. Humans approve, steer, and maintain quality. The work moves faster, but the fundamental structure of roles hasn't shifted yet.

- Automated teams have interconnected systems handling execution. Humans design the system, set strategic direction, run quality checks, and invest their time in relationships and creative work. The role structure has changed.

"The teams that have been able to augment themselves will greatly surpass those that haven't. As long as you have the right guardrails and maintain taste and strategic thinking, it's a powerful tool. I don't think the gap here is linear. I think it's going to be exponential."

"AI will turn B2B marketing from campaign-driven to always-on. Instead of launching quarterly campaigns, teams will run thousands of micro-tests in real time."

"Always-on programs tailor copy, timing, and channel at the account and contact level. AI drafts assets, proposes next-best actions, summarizes calls, updates the CRM, and surfaces recommended plays—while humans approve and steer."

"Revenue per employee is going to increase drastically at AI-first companies. The idea-to-experiment time is now shorter than ever. Your idea can be out there really fast, cut off or doubled down on quicker than ever."

"AI is significantly compressing the time it takes to go from idea to execution to iteration. Campaigns that once required weeks can now be launched in days, and with fewer people. In crowded software markets, the teams that move fastest and can test, learn, and scale quickly will gain a strong advantage."

"People think AI is perfect and should be perfect. It's not. It evolves over time, and it's something you need to start perfecting your craft for today, not tomorrow."

Execution to orchestration

"It's no longer about executing the tasks. It's about designing the system that does the work. Make your job obsolete by building the system, then lead it. We still need humans in the loop for taste and strategy."

"Marketers who outsource all the thinking to AI will experience metacognitive decline. Struggle with the problem for 10–20 minutes first, then use AI as the double checker."

"Study prompt engineering. It's mastering the creative brief for machines. Move beyond single prompts to building autonomous campaigns. Document and template everything, because that's how you automate it."

"Start with an AI Content & Ops Copilot tied to your CRM/MAP. Pull a minimal account/contact view, use a versioned knowledge base, generate on-brand assets with citations, summarize calls, draft follow-ups, update CRM, recommend next steps. Humans approve and everything is logged."

"Time savings often don't translate into impact because teams fail to redesign the process. Decide what to do with the 'free time' and optimize the review process, otherwise there's almost no impact."

"Marketing managers become AI strategy leads. SDRs become sales AI orchestrators. Content teams become content AI architects and evaluators."

"We're moving from 70% admin and 30% sales to an 80/20 inversion, with AI executing the repetitive work in the background."

"Appoint one owner for 3–6 months. Do weekly usage reviews. Commit long enough to learn, then course-correct. Leaders need to be in the tools, adaptable, and strong on human connection."

"Move fast with compressed idea→execution→iteration cycles, but pair it with clean data, integrated stacks, and governance. That's where speed turns into durable advantage."

Infrastructure as prerequisite

"AI will start to act more like ambient intelligence, a quiet layer connecting internal and external context. The real value lies in turning that context into proactive understanding and more confident, faster decision‑making."

"Once you're shipping value and your data is clean and labeled, add predictive lead qualification as Phase 2 with explanations for scores, a sales feedback loop and holdout tests to prove incremental lift."

"SMBs typically opt for out-of-the-box, enterprises build their own in-house solutions. Just like regular software, nothing new there."

"AI takes PLG from static to adaptive. Imagine every user getting a personalized onboarding flow, the right upgrade nudge at the perfect time, and in‑app content tailored to their exact use case—all without a sales call."

"Teams should deepen their understanding of search behavior across evolving platforms… Treat 'GEO' as the same core discipline—search behavior—expressed in new UX."

"Operational efficiency gains… Enhanced pipeline contribution… Capability building: upskilling talent so that AI isn't just a tool but… a core part of the process and way of working."

4. Where we stand on AI

The principles that guide our work with AI

Trying to track everything is a losing game. What is realistic is having a clear philosophy for how you use AI: a small set of principles that guide how you learn, where you adopt, and how you apply it to commercial work.

We use AI extensively, but not indiscriminately. We have explicit boundaries on where we won’t use it, even if we technically could.

Principle #1:

Human strategy sets direction; AI handles execution

- 66% of marketers say human oversight is essential for AI deployment

- 71% cite AI's lack of creativity and contextual knowledge as a fundamental barrier

- Average trust in AI outputs sits at 6-7 out of 10, which represents moderate, but not high, confidence

"AI is not about delegation. It's about implementing, amplifying, and distributing the core value that you create. If you delegate strategy, you're no longer performing your role."

"AI should handle execution better than humans ever could. But it should never take over the experience, the strategic thinking, or the authenticity. Those stay human."

Principle #2:

Scale through intelligent automation, not headcount

- 60% of founders in our survey have stopped hiring roles they previously would have filled, choosing AI-assisted productivity instead.

- AI-native startups like Swan AI are generating $10M in pipeline per employee, an order of magnitude beyond traditional benchmarks.

"The old model was simple: more clients meant more hires. AI breaks that. We automate the time-consuming, low-impact work and redirect that capacity to strategic execution. As a result, we can serve more clients or deliver better outcomes without adding people."

Principle #3:

First-draft assistance, final-draft human refinement

"Bad thought leadership starts with bad thinking, not bad writing. An interesting insight will always survive a poor articulation. A hollow insight doesn't improve with better articulation. AI amplifies whatever you put in, value or slop."

Principle #4:

AI as creative sparring partner, not original thinker

"In a world where everything can be copied, the only thing that makes you different is your brand. Everything else in the next five to ten years will probably be replaceable. We use AI as a sparring partner that helps us iterate and explore our own ideas."

Principle #5:

Data discipline and privacy compliance are non-negotiable

Principle #6:

Outcome-focused deployment

- 70% of teams cannot measure their current AI ROI. Most teams are using AI because it's available, not because it demonstrably improves results.

- Time savings without process redesign create zero business impact.

- Efficiency gains that don't translate to better outcomes or freed capacity are accounting fiction.

"If you can't measure whether AI improved the outcome, don't use it. Deploy where you can prove impact, abandon where you can't."

Our AI boundaries

The two skills that matter

Skill one: Get really good at what AI can't do

Skill two: Get really good at using AI

The dangerous middle

• Original thinking

• First principles

• Pattern recognition

• Authenticity

• Workflow design

• Process automation

• AI agent management

• Quality protocols
